AI Is Here to Stay. How Do We Govern It?

By Bill Brink

Before Pandora’s box was an idiom, it was, well, a box–actually, a jar, in the original Greek tale. Told never to open it, Pandora couldn’t resist, unleashing a torrent of evil and misery upon the world. But what gets lost in the phrase’s modern usage is the box’s final ingredient: hope.

What computer scientists, technologists, policy makers and ethicists must do now, in an age where artificial intelligence is inextricably intertwined with our everyday lives and growing more powerful by the month, is flip the idiom on its head: Proceed in such a way that the hope AI represents can escape the box and be brought to bear against the problems of our society while its risks remain squashed inside, marginalized and mitigated. And the hope has never been greater.

“We sometimes joke about really smart people, 'They’re going to cure cancer someday,'” said Martial Hebert, the Dean of Carnegie Mellon University’s School of Computer Science. “Well, these people working on AI are going to cure cancer. All the main human diseases, I totally believe, are going to be cured by giant computer clusters, doing large-scale machine learning and related techniques. And we’re just scratching the surface now.”

But who makes the rules? Who has jurisdiction? Do we need a new Cabinet department or can we bake it into existing government agencies?

The European Union took the lead by passing the AI Act, the world’s first comprehensive law governing AI. U.S. states are introducing bills regulating aspects of AI, such as deepfakes, privacy, generative AI and elections. In October, the White House issued a broad AI executive order that provided for monumental innovation and addressed security, privacy, equity, workplace disruption and deepfakes. The bipartisan Future of AI Innovation Act, introduced in the U.S. Senate in April, aims to promote American leadership in AI development via partnerships between government, business, civil society, and academia, and the Secure Artificial Intelligence Act, introduced in the Senate in May, seeks to improve the sharing of information regarding security risks and vulnerability reporting.

Senate Majority Leader Chuck Schumer has made a point of educating lawmakers about the benefits and risks of AI; in March, he announced $10 million in funding for the National Institute of Standards and Technology (NIST) to support the recently established U.S. AI Safety Institute.

Very little about governing AI is clear, but one thing is.

“Don’t regulate the technology,” said Ramayya Krishnan, the Dean of CMU’s Heinz College of Information Systems and Public Policy, “because the technology will evolve.”

What are we regulating?

Artificial intelligence has existed in some form for decades, but advances in computing power have accelerated both its capabilities and the conversations about its risks. Recent improvements in generative AI tools like ChatGPT, DALL-E and Synthesia, which can create text, images and video with startling realism, have intensified the calls for governance. Silicon Valley and lawmakers generally agree on the pillars of this governance: AI models should be safe, fair, private, explainable, transparent and accurate.

We have mechanisms in place to investigate and punish those who commit crimes. Whether you rob a bank with a ski mask or AI-generated polymorphic malware, the FBI still has jurisdiction. But can the current institutions keep up with this rapidly changing technology, or do we need a central clearinghouse?

Both. There are more than 400 federal agencies. If, say, the European Union or the Red Cross is attempting to liaise with the U.S. government on AI, it can’t do so across hundreds of different bureaucratic institutions.

“You do need to boost capability in oversight,” Krishnan said. “The Equal Employment Opportunity Commission has to have AI oversight capability. The Consumer Financial Protection Bureau has to have AI oversight capability. In other words, each of these executive agencies that have regulatory authority in specific sectors needs to have the capability to govern use cases where AI is used. In addition, we need crosswalks between frameworks in use in the U.S., such as the NIST AI Risk Management Framework, and frameworks developed and deployed in other jurisdictions to enable international collaboration and cooperation.”

“Real-world risks and impacts at the problem formulation stage”

NIST plays an important role in any conversation regarding AI and its risks. Within NIST, Reva Schwartz, the institute’s Principal Investigator for AI Bias, bridges the gap between algorithms and humanity. She contributed to the creation of NIST’s AI Risk Management Framework and studies the field from a socio-technical perspective, asking questions about AI’s safety and impact on people.

The responsible development and deployment of AI goes beyond making sure the model works. Ideally, the system will be accurate, and not prone to hallucination as some generative AI models are. It will be fair, especially when its outputs lead to a significant impact on people’s lives as in the cases of criminal sentencing and loan approval. It will be explainable and transparent: How did it reach its conclusion? It will not reveal identifiable personal information that may have been included in the information used to train it.

“Currently, people tend to look at the AI lifecycle as just the quantitative aspects of the system,” Schwartz said. “But there’s so much more to AI than the data, model and algorithms. To build AI responsibly, teams can start by considering real-world risks and impacts at the problem formulation stage.”

Organizations that build safe and responsible AI practices into a model’s creation, rather than slapping them on after the fact, can gain a leg up in the market.

“In practice, promoting these principles often not only doesn’t hurt innovation and economic growth; it actually helps us develop better quality products,” said Hoda Heidari, the K&L Gates Career Development Assistant Professor in Ethics and Computational Technologies at Carnegie Mellon and co-lead of CMU’s Responsible AI initiative, housed in the Block Center for Technology and Society.

The aviation analogy

Hebert and Schwartz both compared the governance of AI to that of air travel, and the analogy works on two levels. Passengers don’t need to know how the airplane works to fly; they only have to trust that the pilots do, and that they will operate the plane properly and within the rules. Safe air travel is also made possible by the agreement between airlines and manufacturers to share details of any incident, no matter how small, so that the entire community can learn from it.

While decades of air travel records exist, we’re too early in the development of AI–or generative AI, at least–for that kind of catalog.

“Something that I’ve been negatively surprised with is how haphazard some of the practices around AI are,” Heidari said. “This is not a fully mature technology. We don’t currently have well-established standards and best practices. And as a result, when you don’t have that frame of reference, it is very easy for it to be misused and abused, even with no bad intention.”

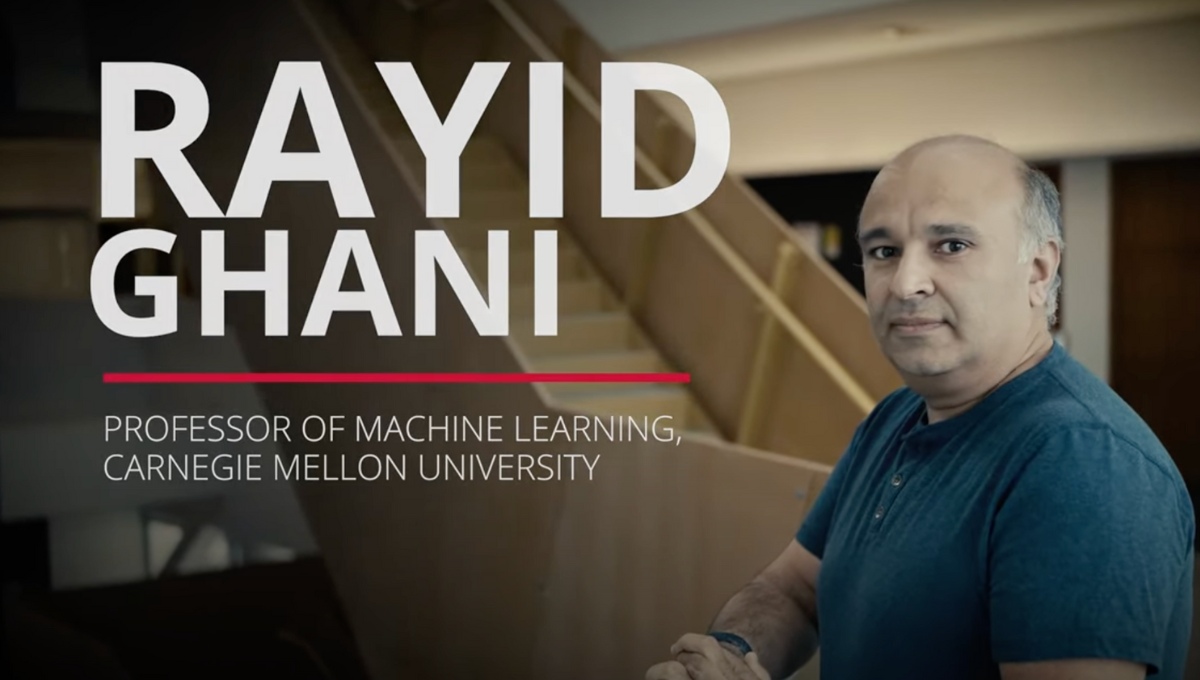

Heidari recently contributed to a report on the safety of advanced AI from the AI Seoul Summit, a joint effort between South Korea and the United Kingdom. Krishnan, Heidari and Professor Rayid Ghani testified before Congress last summer and met with legislators from both parties to educate them on the opportunities and risks of generative AI.

“We’re in the center of the ongoing policy debate in Washington and elsewhere around, how should we regulate this new tool that will likely impact every industry?” said Steve Wray, the executive director of the Block Center. “We’re also in the middle of trying to understand, what does it mean for the future of work?”

Decades of work, from Turing to Minsky to Simon and Newell to Hinton, have led us to this point. Lawmakers the world over, from the EU to Capitol Hill to state houses, must ensure we harness the hope of AI for the greater good and slam the lid shut on the rest.